Caching strategy might be one of the most valuable differentiators for Content Delivery Networks (CDNs). Effective caching reduces latency and lowers the load on the master copy of the data, also known as the origin. Poor policies can degrade user experience and accelerate hardware wear, particularly in terms of SSD endurance.

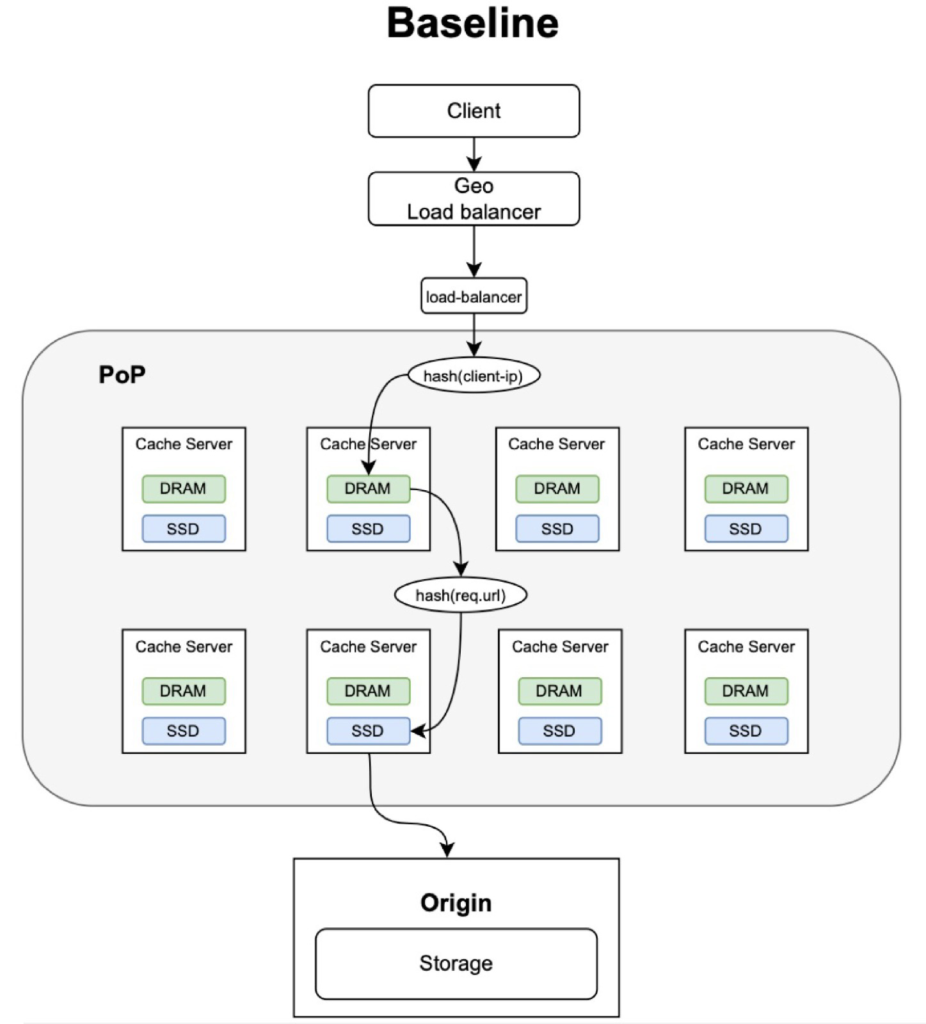

Caching problems are amplified at global scale: minor policy changes can instantly shift terabytes of traffic. Cloudflare’s global network spans more than 330 Points of Presence (PoPs) distributed across over 100 countries. Each PoP consists of arrays of cache servers operating under a local load balancer.

These servers form the backbone of the edge tier, geographically close to users but logically unified by Cloudflare’s Anycast network. Within each PoP, individual servers implement a sophisticated two-tier caching architecture, separating DRAM and SSDs into distinct but cooperating layers.

This article will analyze CDN caches and detail Cloudflare’s “promotion-on-hit” model. It will also show how simulations can reveal performance trade-offs unseen in live systems.

Why are CDNs Necessary?

Every user expects fast, reliable content, no matter where they are. The problem is simple: most applications have a small number of origins and a global audience. Sending every request back to a handful of data centers adds latency, wastes bandwidth, and overloads storage and compute at the origin.

Content Delivery Networks fix that by moving data closer to users. A CDN runs a mesh of edge locations (PoPs) around the world. DNS and Anycast routing steer each request to a nearby PoP, where cache servers serve popular objects directly from DRAM or SSD instead of calling back to the origin every time.

This changes both performance and economics:

- Lower latency – Responses come from a nearby cache rather than a distant origin, cutting round-trip across long-haul links.

- Lower origin load – Cache hits absorb the bulk of traffic, so origins handle fewer, more meaningful requests.

- Lower infrastructure cost – Offloading reads from origin reduces required compute, bandwidth, and storage headroom.

- Higher reliability – When links to origin are slow or unstable, cached content at the edge can still serve many requests.

That “simple” picture hides how cache tiers, promotion rules, and eviction policies actually shape hit rate, SSD wear, and overall CDN efficiency.

Fundamentals and Challenges with CDN Caching

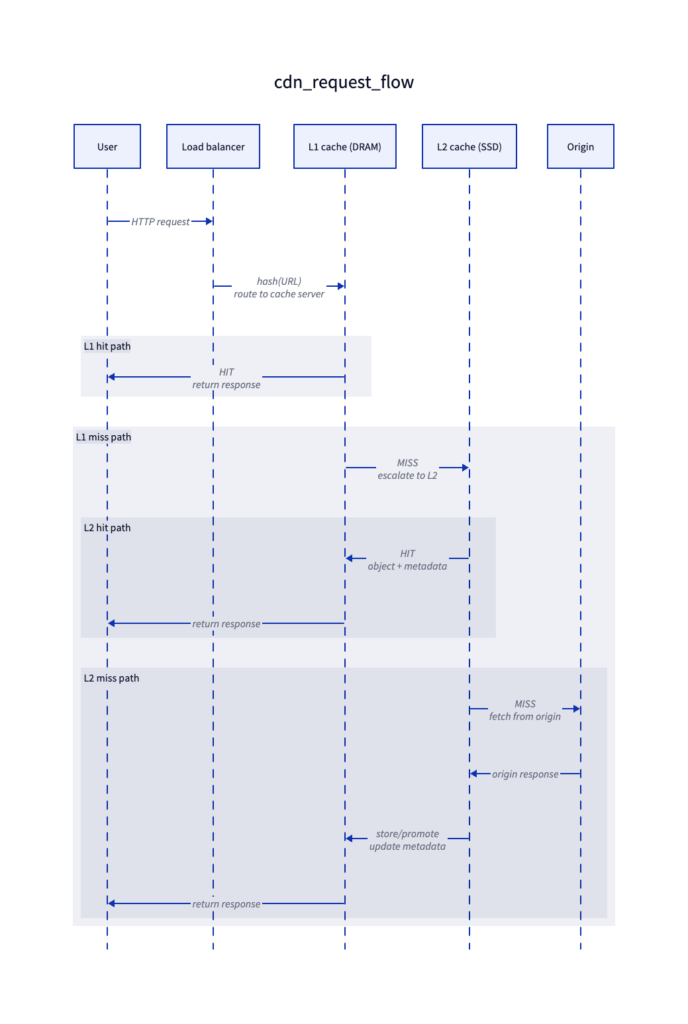

CDN caching is a hierarchy designed to minimize distance, both physical and logical, between users and data. Each point of presence (PoP) serves requests through a hit/miss pipeline:

- Request arrives at a load balancer.

- Request is hashed and routed to a cache server.

- L1 cache (in DRAM) performs a lookup.

  - Hit: fetch from the L1 cache and serve the response while updating metadata to record access time and object freshness. Depending on policy, this hit may also trigger promotion to a higher cache tier, ensuring hot objects persist longer in the system.

  - Miss: escalate to L2 cache (SSD).

- L2 repeats the lookup.

  - Hit: return and optionally promote or refresh metadata.

  - Miss: fetch from origin, return to the user, and decide what to store.
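The pipeline above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not Cloudflare's implementation: `ORIGIN`, `CacheServer`, and `route` are hypothetical names, both tiers are plain dictionaries, and freshness checks, eviction, and promotion are left out. On a full miss the sketch stores the object in L1 only, which is one possible answer to "decide what to store".

```python
import hashlib

# Hypothetical origin content, used only for illustration.
ORIGIN = {"/a.css": b"body{}", "/b.js": b"let x=1"}


class CacheServer:
    """One cache server with a DRAM tier (l1) and an SSD tier (l2), modeled as dicts."""

    def __init__(self):
        self.l1 = {}
        self.l2 = {}

    def handle(self, url):
        if url in self.l1:                 # L1 lookup
            return self.l1[url], "L1 hit"
        if url in self.l2:                 # L1 miss: escalate to L2
            return self.l2[url], "L2 hit"
        body = ORIGIN[url]                 # L2 miss: fetch from origin
        self.l1[url] = body                # assumption: admit new objects to L1 only
        return body, "origin fetch"


def route(url, servers):
    """Load balancer step: hash the URL and pick a cache server deterministically."""
    digest = hashlib.sha256(url.encode()).digest()
    return servers[int.from_bytes(digest[:8], "big") % len(servers)]


servers = [CacheServer() for _ in range(4)]
first = route("/a.css", servers).handle("/a.css")    # cold: goes to origin
second = route("/a.css", servers).handle("/a.css")   # same server, now an L1 hit
```

Because the URL hash is deterministic, the second request lands on the server that already holds the object.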

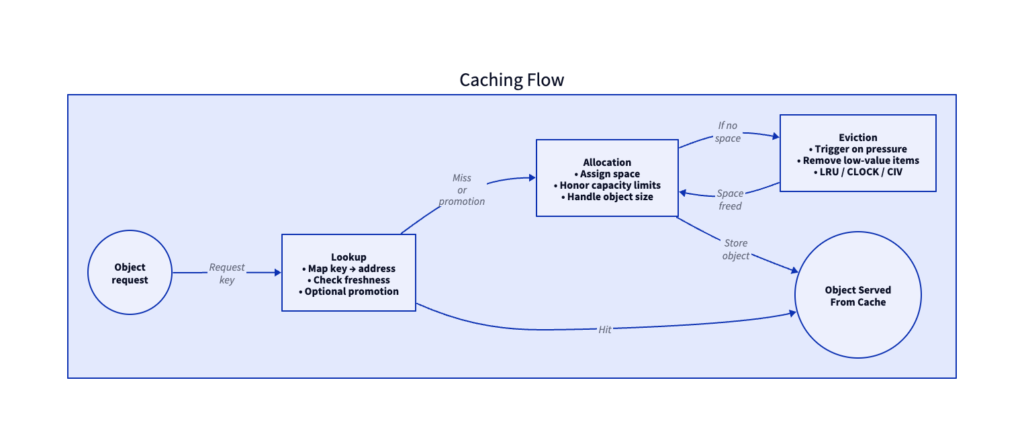

This seemingly simple flow hides complex mechanics. Each cache tier contains three internal modules:

- Lookup – maps request keys (e.g., URL hashes) to memory addresses and validates freshness metadata. A successful lookup can also trigger promotion, moving the object to a higher tier.

- Allocation – assigns physical space for new or promoted objects, ensuring optimal layout in DRAM or SSD. Allocations are constrained by tier capacity and object size.

- Eviction – activates when allocation pressure rises, removing the least valuable entries to free space. Algorithms like LRU or CLOCK rely on access timestamps, while CIV, developed in Magnition’s internal modeling, simplifies pointer operations to reduce overhead in high-throughput environments.

These three processes operate in a continuous feedback loop: lookup informs allocation, allocation triggers eviction, and eviction rebalances the cache hierarchy.
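To make the lookup, allocation, and eviction loop concrete, here is a small sketch of a single tier with a byte budget and LRU eviction. The class and method names (`LRUCache`, `lookup`, `allocate`, `evict`) are assumptions chosen for illustration. LRU is used because it is the simplest of the policies named above; CLOCK and CIV are not shown.

```python
from collections import OrderedDict


class LRUCache:
    """A single cache tier with a byte budget and least-recently-used eviction."""

    def __init__(self, capacity_bytes):
        self.capacity_bytes = capacity_bytes
        self.used_bytes = 0
        self.entries = OrderedDict()  # key -> object size in bytes, oldest first
        self.evictions = 0

    def lookup(self, key):
        """Return True on a hit and refresh the entry's recency."""
        if key in self.entries:
            self.entries.move_to_end(key)
            return True
        return False

    def allocate(self, key, size):
        """Reserve space for an object, evicting older entries if needed."""
        if size > self.capacity_bytes:
            return False  # object can never fit in this tier
        if key in self.entries:
            self.used_bytes -= self.entries.pop(key)
        while self.used_bytes + size > self.capacity_bytes:
            self.evict()  # allocation pressure triggers eviction
        self.entries[key] = size
        self.used_bytes += size
        return True

    def evict(self):
        """Remove the least recently used entry."""
        _, size = self.entries.popitem(last=False)
        self.used_bytes -= size
        self.evictions += 1


cache = LRUCache(capacity_bytes=100)
cache.allocate("a", 60)
cache.allocate("b", 30)
cache.lookup("a")        # "a" becomes the most recently used entry
cache.allocate("c", 40)  # not enough room: "b" is evicted, "a" survives
```

The lookup on "a" changes which entry the later allocation evicts, which is the feedback loop in miniature.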

Multi-Tier Dynamics

Caches rarely operate in isolation. In multi-tier systems, policies define promotion (moving a frequently accessed object from a slower to a faster layer) and demotion (the opposite).

- More promotion raises hit rates; however, it increases write activity.

- Less promotion reduces wear but risks stale or missing data.

Choosing that balance depends on the workload type. Text and API payloads benefit from small, frequent DRAM hits. Media and large assets lean on SSD capacity.

Working-Set Fit

A cache performs best when its capacity is large enough relative to the workload’s active set, a relationship captured by the working-set ratio. If the cache is undersized relative to the working set, items are evicted before they are reused, a behavior known as cache churn. Churn increases origin fetches and degrades byte-hit efficiency. Conversely, oversizing adds cost without significant improvement once the active working set is already retained.
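A deliberately extreme example shows both effects. The sketch below replays a synthetic trace that cycles through a 100-object working set ten times, against an LRU cache sized in objects. The helper name `lru_hit_ratio` and the trace are assumptions for illustration. A cyclic scan is the worst case for LRU, so real workloads will degrade more gradually.

```python
from collections import OrderedDict


def lru_hit_ratio(trace, capacity):
    """Replay a trace against an LRU cache holding `capacity` objects."""
    if capacity <= 0:
        raise ValueError("capacity must be positive")
    cache = OrderedDict()
    hits = 0
    for key in trace:
        if key in cache:
            hits += 1
            cache.move_to_end(key)
        else:
            if len(cache) >= capacity:
                cache.popitem(last=False)  # evict the least recently used object
            cache[key] = True
    return hits / len(trace)


working_set = list(range(100))
trace = working_set * 10  # synthetic: ten passes over the same 100 objects

undersized = lru_hit_ratio(trace, capacity=50)   # 0.0: every object is evicted before reuse
fitted = lru_hit_ratio(trace, capacity=100)      # 0.9: only the first pass misses
oversized = lru_hit_ratio(trace, capacity=200)   # 0.9: extra capacity buys nothing
```

With half the working set cached, churn drives the hit ratio to zero. Doubling capacity beyond the working set leaves the hit ratio unchanged.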

Effects of Load Balancing

How requests map to caches also matters. Random load balancing distributes traffic evenly but loses locality. Hash-based balancing keeps similar URLs on the same cache, improving reuse. The right approach depends on request entropy and cache count per PoP.
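The locality difference can be illustrated by counting how many cached copies a PoP ends up holding under each policy. This is a rough sketch on a synthetic trace; `hash_route`, `count_copies`, the server count of 8, and the URL names are all assumptions.

```python
import hashlib
import random


def hash_route(url, n_servers):
    """Hash-based balancing: the same URL always maps to the same server."""
    digest = hashlib.sha256(url.encode()).digest()
    return int.from_bytes(digest[:8], "big") % n_servers


def count_copies(trace, pick_server):
    """Count distinct (server, url) pairs, i.e. cached copies across the PoP."""
    return len({(pick_server(url), url) for url in trace})


N_SERVERS = 8
urls = [f"/asset/{i}" for i in range(20)]
trace = urls * 50  # synthetic: each URL requested 50 times

rng = random.Random(0)  # seeded so the run is repeatable
hash_copies = count_copies(trace, lambda url: hash_route(url, N_SERVERS))
random_copies = count_copies(trace, lambda url: rng.randrange(N_SERVERS))
```

Hash routing keeps exactly one copy per URL. Random routing spreads each URL across most servers, so the same cache capacity holds fewer distinct objects.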

Global Edge of Cloudflare

As introduced earlier, Cloudflare’s network spans more than 330 locations worldwide, each hosting multiple cache arrays. These PoPs form the global edge responsible for serving requests locally while syncing policies across the network. The network uses an Anycast routing method, where each PoP advertises the same IP prefix so that user requests automatically go to the closest operational data center. This approach significantly reduces latency and enhances resilience.

At the edge, Cloudflare often functions as both the authoritative DNS provider (in a full-zone configuration) and a reverse proxy for web traffic. The reverse proxy layer terminates TLS sessions, applies security filters (including WAF, bot management, and DDoS mitigation), and then serves cached content from the edge, reducing load on the origin.

For caching, Cloudflare supports tiered caching strategies, where data centers are grouped hierarchically (lower-tier vs upper-tier): if content is not in the local cache, the lower tier asks an upstream cache node rather than every node hitting the origin. This reduces origin load and improves hit ratios.
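The effect of data-center tiering on origin load can be sketched as a chain of lookups. The `Tier` class, the `stats` dictionary, and the three-lower-tier topology are hypothetical and chosen only to illustrate the idea; the sketch does not describe how Cloudflare selects upper-tier nodes.

```python
class Tier:
    """A data-center cache that falls back to a parent tier, then to the origin."""

    def __init__(self, name, parent=None):
        self.name = name
        self.parent = parent  # upstream tier, or None if the next hop is the origin
        self.store = {}

    def get(self, key, origin, stats):
        if key in self.store:
            return self.store[key]
        if self.parent is not None:
            value = self.parent.get(key, origin, stats)  # ask the upper tier first
        else:
            stats["origin_fetches"] += 1                  # only the top tier hits origin
            value = origin[key]
        self.store[key] = value
        return value


origin = {"/logo.png": b"png-bytes"}  # hypothetical origin content
stats = {"origin_fetches": 0}
upper = Tier("upper")
lowers = [Tier(f"lower-{i}", parent=upper) for i in range(3)]

for pop in lowers:
    pop.get("/logo.png", origin, stats)  # three cold lower tiers request the same object
```

Three cold lower-tier caches produce a single origin fetch, because the second and third requests are served by the upper tier.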

This design ensures fast, secure, and reliable content delivery by combining edge computing, security filtering, and intelligent caching for users worldwide.

Cloudflare Two-tier Caching Approach

Cloudflare employs a unique Two-Tier Caching Strategy that inverts the typical Content Delivery Network (CDN) approach.

Most CDNs use a two-tier system where the faster cache (DRAM) is a subset of the larger, slower cache (SSD), with both layers storing content fetched from the origin. Cloudflare, however, structures its layers differently:

- L1 (DRAM): Transient Cache

  - Content fetched from the origin is initially written only to L1.

  - L1 holds recent, unproven content.

  - If the content is requested again, L1 registers a hit.

- L2 (SSD): Hot Object Cache

  - Content is promoted from L1 to L2 only after a configurable number of hits (typically one to four, depending on the Point of Presence, or PoP).

  - Promotion signifies that the content is “hot” and merits persistent storage on the SSD.

This inversion means that new content resides first in L1 (DRAM), the transient tier optimized for short-term reuse. Popular content, once validated by repeat access, migrates to L2 (SSD), the persistent tier designed for durability and higher capacity. The real performance gain of either tier lies in avoiding an origin fetch, not in the few microseconds separating DRAM and SSD latency.

The key benefit of this design is a significant reduction in SSD writes. The vast majority of requests are for cold objects, and under this policy SSD writes occur only for objects proven to be hot, so most origin fetches never reach the SSD. This minimizes wear, effectively extending the lifespan of the SSDs.
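A minimal sketch of promotion-on-hit, assuming LRU eviction in both tiers and capacities counted in objects rather than bytes. The class name `PromotionOnHitCache` and its parameters are hypothetical. The sketch also assumes a promoted object moves out of L1 rather than being kept in both tiers, which the description above does not specify.

```python
from collections import OrderedDict


class PromotionOnHitCache:
    """Two tiers: new objects enter L1 (DRAM); repeat hits promote them to L2 (SSD)."""

    def __init__(self, l1_capacity, l2_capacity, promote_after_hits=1):
        self.l1 = OrderedDict()  # key -> number of L1 hits so far, in LRU order
        self.l2 = OrderedDict()  # key -> True, in LRU order
        self.l1_capacity = l1_capacity   # must be >= 1
        self.l2_capacity = l2_capacity   # must be >= 1
        self.promote_after_hits = promote_after_hits
        self.ssd_writes = 0
        self.origin_fetches = 0

    def request(self, key):
        if key in self.l1:
            self.l1[key] += 1
            self.l1.move_to_end(key)
            if self.l1[key] >= self.promote_after_hits:
                self._promote(key)       # object has proven itself hot
            return "L1 hit"
        if key in self.l2:
            self.l2.move_to_end(key)
            return "L2 hit"
        self.origin_fetches += 1
        if len(self.l1) >= self.l1_capacity:
            self.l1.popitem(last=False)  # evict the least recently used L1 object
        self.l1[key] = 0                 # new content is written to L1 only
        return "miss"

    def _promote(self, key):
        del self.l1[key]                 # assumption: promotion moves the object
        if len(self.l2) >= self.l2_capacity:
            self.l2.popitem(last=False)
        self.l2[key] = True
        self.ssd_writes += 1             # the only place the SSD is written


cache = PromotionOnHitCache(l1_capacity=4, l2_capacity=4, promote_after_hits=1)
trace = ["hot", "cold-1", "hot", "cold-2", "hot", "cold-3"]  # synthetic trace
results = [cache.request(key) for key in trace]
```

In this run the three one-off objects cause origin fetches but no SSD writes. Only "hot" is written to the SSD, once.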

Comparison: Wikimedia’s Store-on-Miss Model

Wikimedia’s architecture stores every origin miss into both L1 and L2 immediately. It’s simpler but less selective. The cost is higher write volume, shorter SSD life, and different hit-rate dynamics.
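The difference in write volume can be estimated with simple counting. The sketch below assumes caches large enough that nothing is evicted, so each object is written to SSD at most once. The function names and the synthetic trace are illustrative assumptions, not measurements from either system.

```python
from collections import Counter


def ssd_writes_store_on_miss(trace):
    """Store-on-miss: every distinct object is written to SSD on its first request."""
    return len(set(trace))


def ssd_writes_promotion_on_hit(trace, promote_after_hits=1):
    """Promotion-on-hit: an object reaches SSD after one miss plus N hits."""
    counts = Counter(trace)
    return sum(1 for n in counts.values() if n >= 1 + promote_after_hits)


# Synthetic trace: one hot object, one warm object, eight objects requested once.
trace = ["hot"] * 5 + [f"one-hit-{i}" for i in range(8)] + ["warm"] * 2

store_on_miss = ssd_writes_store_on_miss(trace)        # 10 writes
promote_after_1 = ssd_writes_promotion_on_hit(trace)   # 2 writes: "hot" and "warm"
promote_after_2 = ssd_writes_promotion_on_hit(trace, promote_after_hits=2)  # 1 write: "hot"
```

The gap grows with the share of objects that are requested only once, which is why the promotion threshold is a natural tuning knob.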

Performance and Optimization of CDN

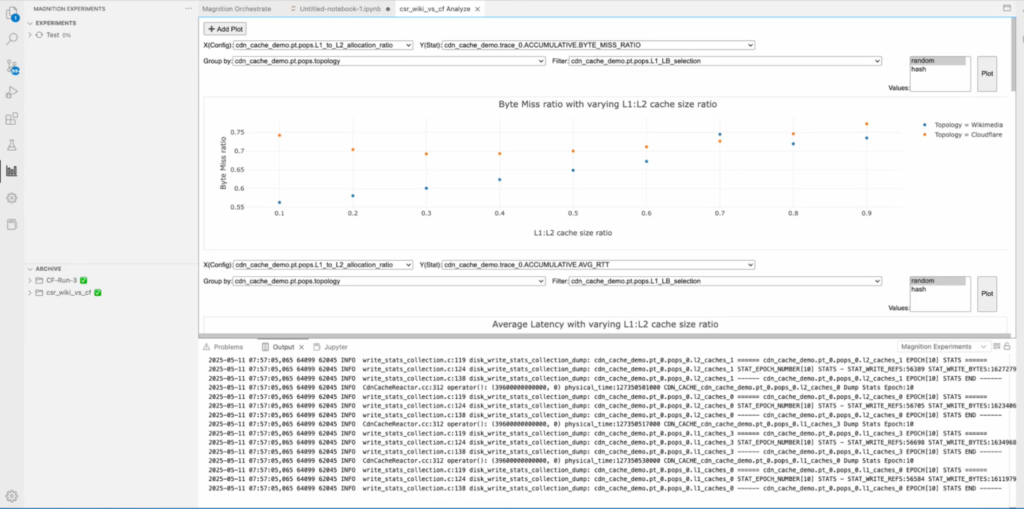

Together, the cache parameters (L1/L2 size, eviction policy, promotion threshold, and load balancer type) create a massive configuration space. Manual tuning can’t explore it.

Magnition System Designer models these systems as connected components with publishable parameters. Each run replays real CDN traces, typically 60- to 200-GB datasets representing hours of global traffic.

Parameters sweep automatically through thousands of variations:

- L1/L2 ratio – 10/90, 20/80, … 50/50

- Promotion threshold – promote after 1–4 hits

- Eviction – LRU, FIFO, CLOCK, CIV

- Load balancer – random vs hash

- Maximum cacheable object – limit to 10 MB to protect small-object reuse
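As a rough sketch of how such a sweep might be enumerated, the grid below expands the listed parameters into one configuration per combination. The key names and the `expand` helper are assumptions for illustration and are not Magnition System Designer's interface. Only the L1/L2 ratios written out above are included; the steps elided in the list are left out rather than guessed.

```python
from itertools import product

# Hypothetical parameter names; values taken from the list above.
param_grid = {
    "l1_share_pct": [10, 20, 50],           # L1/L2 split of 10/90, 20/80, 50/50
    "promote_after_hits": [1, 2, 3, 4],
    "eviction": ["LRU", "FIFO", "CLOCK", "CIV"],
    "load_balancer": ["random", "hash"],
    "max_object_mb": [10],
}


def expand(grid):
    """Return one dict per combination of parameter values (a full factorial sweep)."""
    keys = list(grid)
    return [dict(zip(keys, values)) for values in product(*(grid[k] for k in keys))]


configs = expand(param_grid)
print(len(configs))   # 3 * 4 * 4 * 2 * 1 = 96 configurations
print(configs[0])
```

Each added parameter value multiplies the number of runs, which is how a handful of knobs grows into thousands of simulations.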

Each simulation measures byte-miss ratio, origin offload, SSD writes, and effective cost (DRAM + SSD). Results feed into a multi-objective optimization engine that identifies Pareto-optimal designs that offer the best trade-offs between performance and cost.
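Pareto-optimality has a compact definition: a design is kept if no other design is at least as good on every objective and strictly better on one. The sketch below applies that filter to two objectives, byte-miss ratio and cost, both to be minimized. The `pareto_front` helper and the four example designs are made up for illustration and are not simulation results.

```python
def pareto_front(designs):
    """Return the designs not dominated on (byte_miss_ratio, cost); lower is better."""

    def dominates(a, b):
        no_worse = (a["byte_miss_ratio"] <= b["byte_miss_ratio"]
                    and a["cost"] <= b["cost"])
        strictly_better = (a["byte_miss_ratio"] < b["byte_miss_ratio"]
                           or a["cost"] < b["cost"])
        return no_worse and strictly_better

    return [d for d in designs if not any(dominates(o, d) for o in designs)]


# Illustrative values only.
designs = [
    {"name": "A", "byte_miss_ratio": 0.30, "cost": 100},
    {"name": "B", "byte_miss_ratio": 0.20, "cost": 150},
    {"name": "C", "byte_miss_ratio": 0.25, "cost": 180},  # worse than B on both axes
    {"name": "D", "byte_miss_ratio": 0.10, "cost": 300},
]

front = [d["name"] for d in pareto_front(designs)]  # ["A", "B", "D"]
```

Design C drops out because B is both cheaper and has a lower byte-miss ratio. A, B, and D remain as genuine trade-offs between cost and performance.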

Simulation in Practice

In a set of Cloudflare and Wikimedia experiments, Magnition System Designer ran over 125,000 simulations using real trace data. Each simulated roughly a day’s worth of requests and produced the following patterns:

- Byte-miss ratio vs working-set size: As caches grew from 1 % to 5 % of the workload, misses dropped sharply, then leveled off.

- Cost vs byte-miss ratio: A small increase in DRAM (up to a 20/80 split) improved cache efficiency by reducing origin dependency, measured as byte-miss ratio, not end-user page-load time.

- SSD writes: Cloudflare’s promotion-on-hit cut writes by more than half compared with store-on-miss policies.

- Origin offload: Optimized configurations sent up to 30 % fewer requests to origin servers under identical workloads.

These results confirm the intuition behind Cloudflare’s design but also quantify its sensitivity. Tiny parameter shifts can move performance curves in opposite directions. Without simulation, CDN optimization is reactive: deploy, then roll back. Each live test risks degraded latency and unnecessary wear.

Simulation turns it into a controlled experiment:

- Run real traces against a modeled architecture.

- Sweep thousands of parameter combinations in hours, not weeks.

- Apply Bayesian optimization to focus on variables that matter.

- Evaluate not just single metrics but trade-offs across cost, power, and performance (see Optimizing Content Delivery Networks through Simulations).

This approach mirrors what mechanical and electrical engineers have done for decades: model first, build later. Systems engineers can finally do the same for distributed architectures.

Magnition System Designer identifies optimal configurations, and we have proven that deploying those policies in real environments reproduces the same improvements within a statistical margin. That correlation proves fidelity and validates simulation as a first-class design tool for system architects.

Magnition System Designer in the Loop

Magnition System Designer provides a graphical and programmatic interface to model complex systems such as Cloudflare’s CDN. System designers can:

- Compose architectures visually using predefined components (cache, allocator, eviction engine, load balancer).

- Expose tunable parameters and auto-generate configuration matrices.

- Replay real workloads or synthetic traffic to stress the model.

- Optimize automatically with a multi-objective Bayesian search to minimize both the miss ratio and cost.

For CDN teams, this means modeling a new caching policy, simulating thousands of what-if scenarios overnight, and deploying with statistical confidence instead of relying on guesswork.

Designing CDNs the Way Engineers Design Systems Through Simulation

Cloudflare’s CDN illustrates how thoughtful cache-tier design can turn physical limits such as DRAM cost and SSD endurance into controllable parameters. Its promotion-on-hit architecture extracts efficiency from hardware that powers billions of web sessions daily.

The larger lesson is universal: early simulation prevents late firefighting. Modeling cache policies and network behaviors before deployment avoids expensive rework and accelerates optimization cycles.

With tools like Magnition System Designer, system engineers can test CDN architectures the way chip designers test circuits: iteratively, accurately, and at scale. That shift moves distributed-system engineering closer to other mature engineering disciplines: design, simulate, and build.